About Me

I’m a research scientist working on multimodal foundation models, with applied experience in large-scale multimedia dataset collection and filtering, pretraining, and post-training. My focus is on efficient model refinement, use-case-dependent benchmarks and validation, data quality, and low-rank model personalization. I believe in collaborative innovation that iterates quickly while still respecting ethical considerations.

TL;DR

Data Provenance Initiative (DPI)

Co-Lead

Large-scale audits of the multimodal datasets that power SOTA AI models

M.Sc., Boston University

Electrical & Computer Engineering

Deep Learning, Data Analytics

B.Sc., University of California, Los Angeles

Microbiology, Immunology, & Molecular Genetics

Recent News

November 2024

Research presentation for Women in AI & Robotics.

October 2024

Research presentation for AI Tinkerers x Human Feedback Foundation.

September 2024

The Rapid Decline of the AI Data Commons is accepted to NeurIPS 2024.

August 2024

Platypus models collectively surpass 1M+ downloads on HuggingFace!

July 2024

DPI’s recent work covered by the New York Times, 404 Media, Vox, Yahoo! Finance, and Variety.

March 2024

Joined the Data Provenance Initiative as a project lead!

November 2023

Platypus accepted to NeurIPS 2023 Workshop on Instruction Tuning & Following.

October 2023

Guest Lecturer @ HKUST, LLMOps with Prof. Sung Kim.

September 2023

Joined Raive as a Founding Research Scientist.

Selected Publications

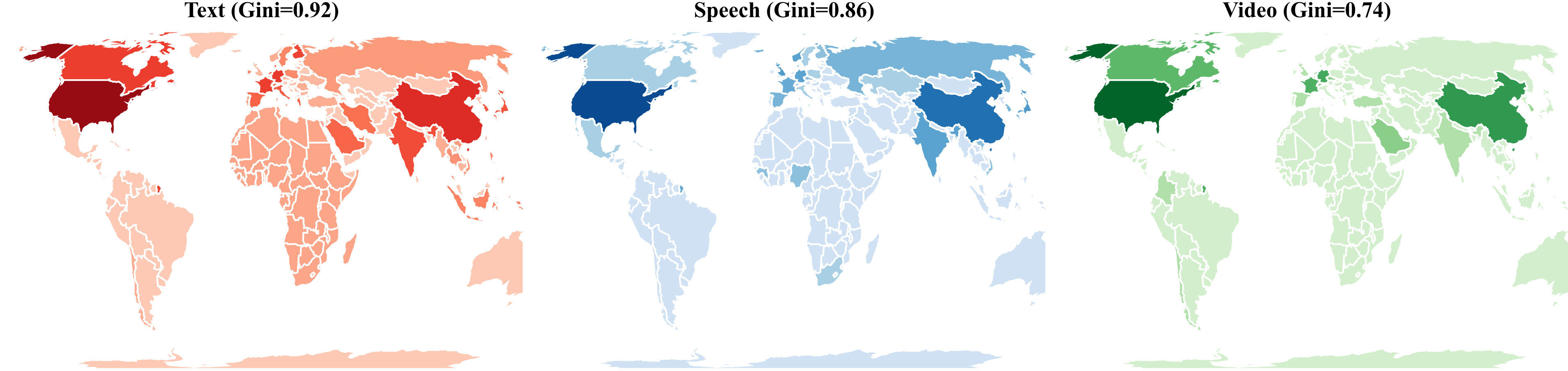

Bridging the Data Provenance Gap Across Text, Speech, and Video

Shayne Longpre, … (23 authors), Ariel N. Lee, … (15 authors), Stella Biderman, Alex Pentland, Sara Hooker, Jad Kabbara (2024)

Under Submission

Addressing the challenges of data provenance across different modalities, including text, speech, and video, proposing solutions to bridge the existing gaps.

Consent in Crisis: The Rapid Decline of the AI Data Commons

Shayne Longpre, Robert Mahari, Ariel N. Lee, … (45 authors), Sara Hooker, Jad Kabbara, Sandy Pentland (2024)

NeurIPS 2024 Datasets and Benchmarks Track

Analysis of 14,000+ web domains to understand evolving access restrictions in AI.

Platypus: Quick, Cheap, and Powerful Refinement of LLMs

Ariel N. Lee, Cole Hunter, Nataniel Ruiz (aka garage-bAInd)

NeurIPS 2023 Workshop on Instruction Tuning and Instruction Following

Developed open-source LLMs through data refinement that were the leading post-trained models at the time of release and have 1M+ downloads on HuggingFace.

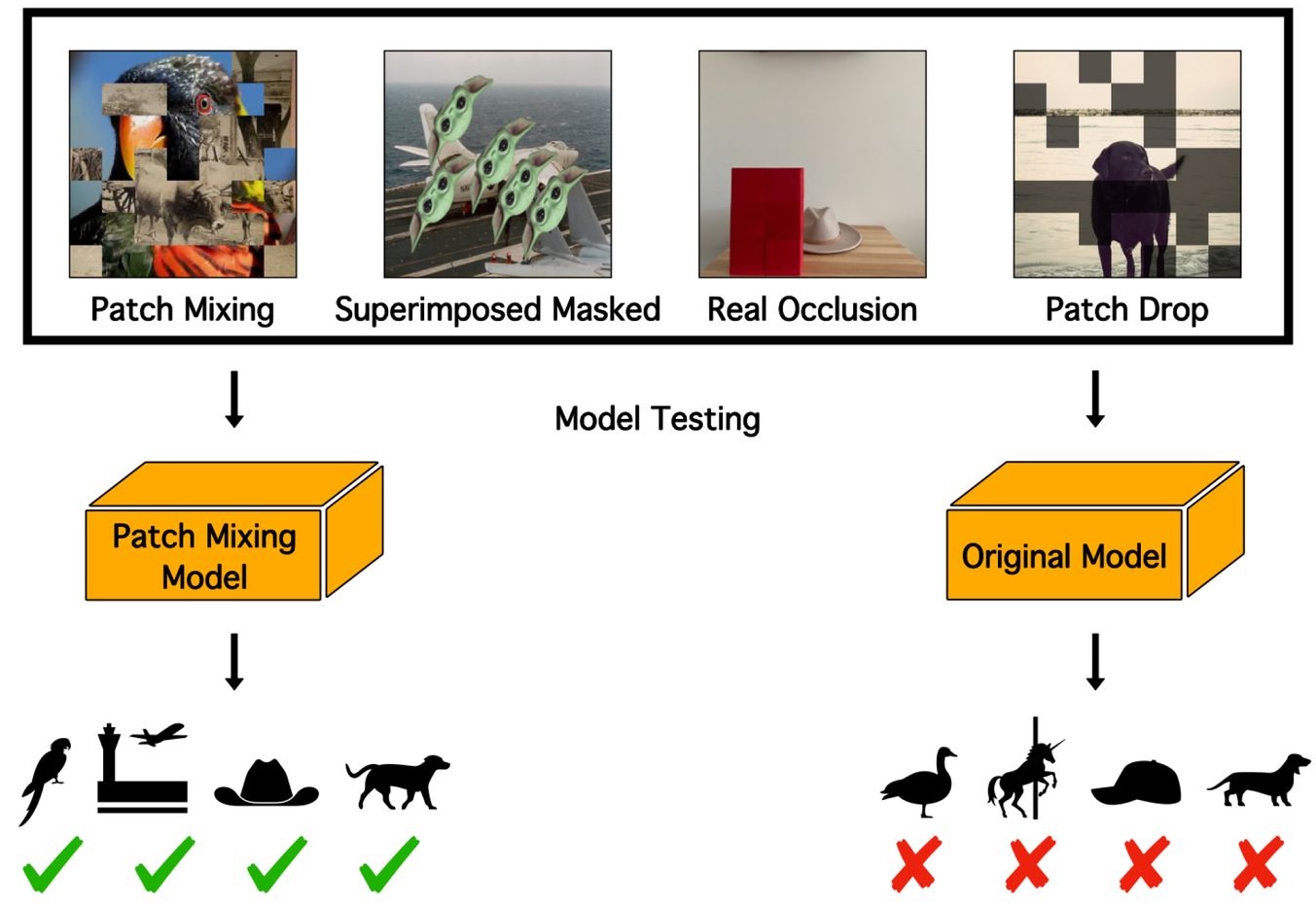

Hardwiring ViT Patch Selectivity into CNNs using Patch Mixing

Ariel N. Lee, Sarah Adel Bargal, Janavi Kasera, Stan Sclaroff, Kate Saenko, Nataniel Ruiz

Created two new datasets and developed a data augmentation method for CNNs that simulates ViT patch selectivity, improving model robustness to occlusions.

Projects & Competitions

Meta AI Video Similarity Competition

8th overall (196 participants) | 1st in AI graduate course challenge (42 participants)

Used a pretrained Self-Supervised Descriptor for Copy Detection model to find manipulated videos in a dataset of 40,000+ videos.

Leveraging Fine-tuned Models for Prompt Prediction

AI research project and Kaggle competition for predicting text prompts of generated images using an ensemble of models, including CLIP, BLIP, and ViT.

Custom, high-quality dataset of 100,000+ generated images, cleaned to have low semantic similarity.

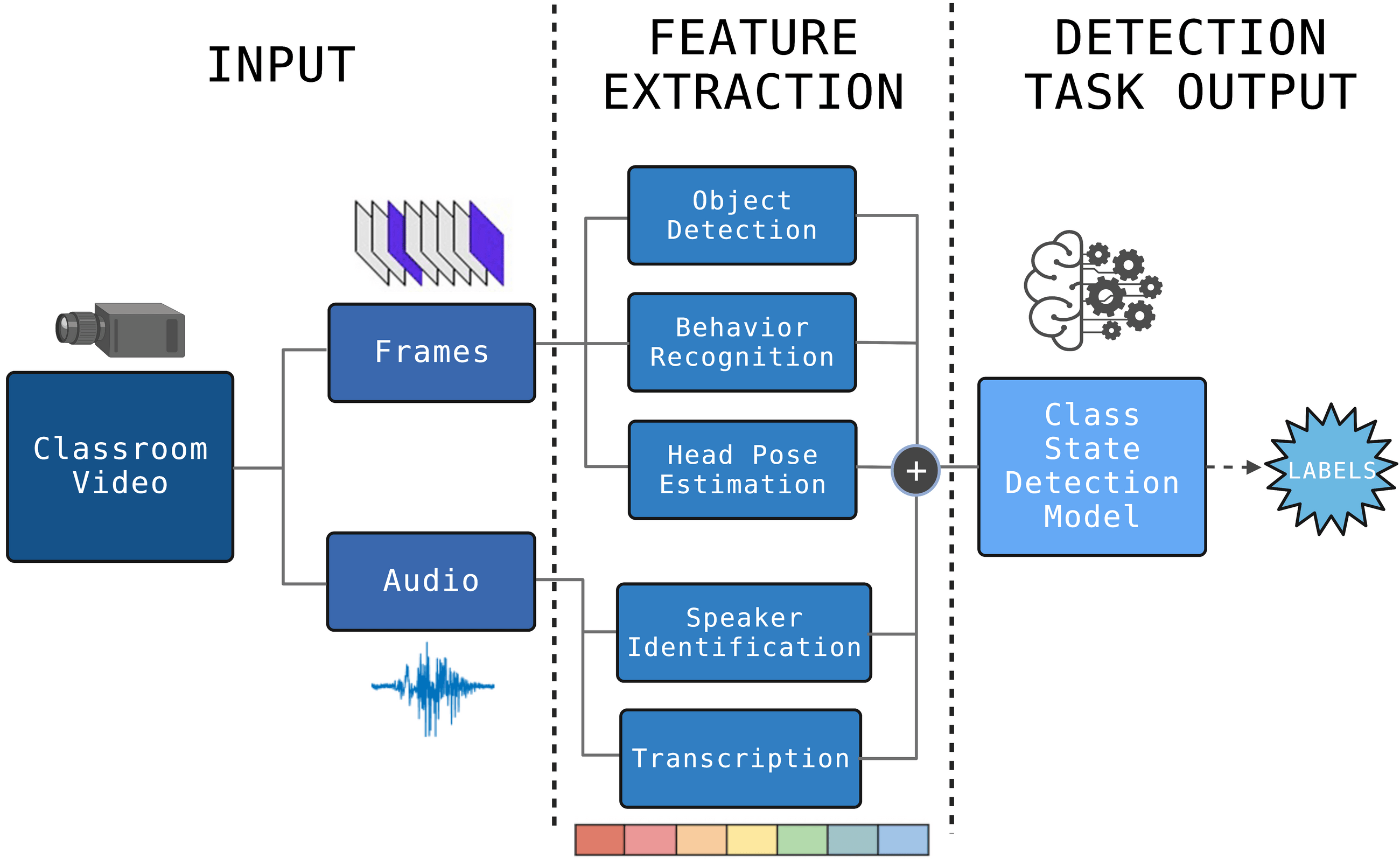

BU Wheelock Educational Policy Center: Analyzing Classroom Time

MLOps Development Team | Data & Process Engineer

Partnered with TeachForward and Wheelock Educational Policy Center to develop a feature extraction pipeline that analyzes the use of teaching time across 10,000+ videos of classroom observations. Created a simple user interface for the client using Gradio and HuggingFace Spaces.